Fake about an “obese Ukrainian officer”: AI-generated content is being used to discredit aid to Ukraine

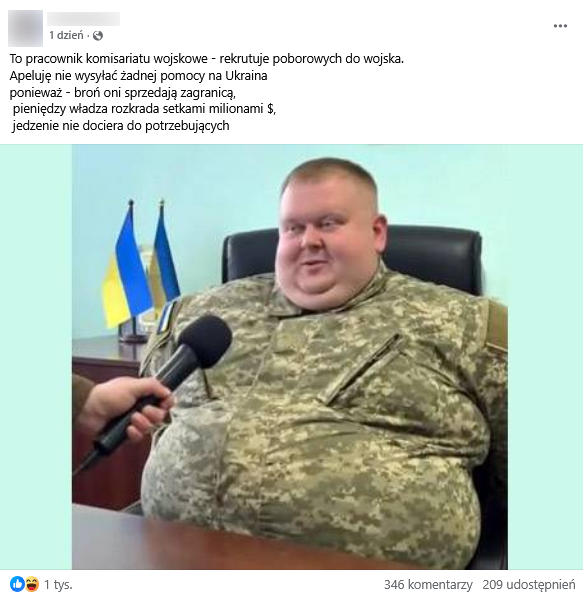

On social media, particularly in the Polish segment of Facebook, purported photos of an overweight man in a Ukrainian military uniform are being widely shared. The authors of these posts claim that he is an officer responsible for mobilization in the Ukrainian army, and that his appearance proves that international aid “does not reach those who truly need it”. Such posts have attracted hundreds of comments and shares. In reality, no such person exists: the image was generated by artificial intelligence to fuel anti-Ukrainian sentiment. The fake was debunked by Polish fact-checkers from Demagog.

The image comes from a video that originally appeared on TikTok. A closer look at the clip reveals signs of AI generation: the chair blends into the background, the shadows on the clothing “move” unnaturally, and the character’s hands are positioned in an artificial way. In the video, the supposed “officer of the Zhytomyr Territorial Recruitment Center” says, “Our team is against a ceasefire”. In addition, the audio is out of sync with the lip movements in the footage.

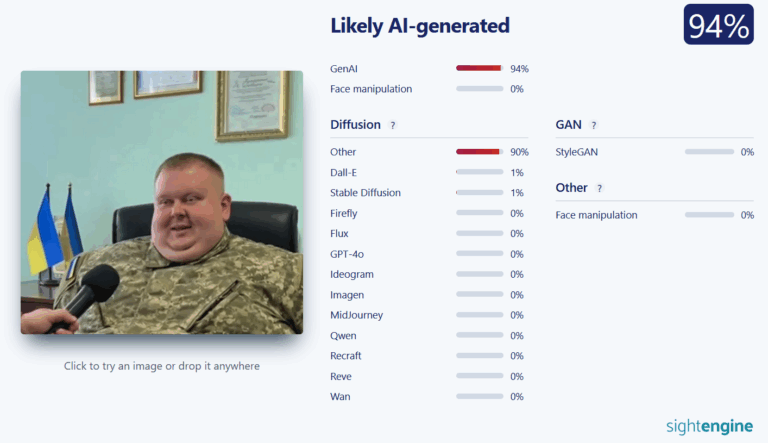

To confirm this, Demagog fact-checkers analyzed the frame using two specialized detection tools, Hive AI and Sightengine. Both indicated a probability of over 90% that the content was generated by artificial intelligence.

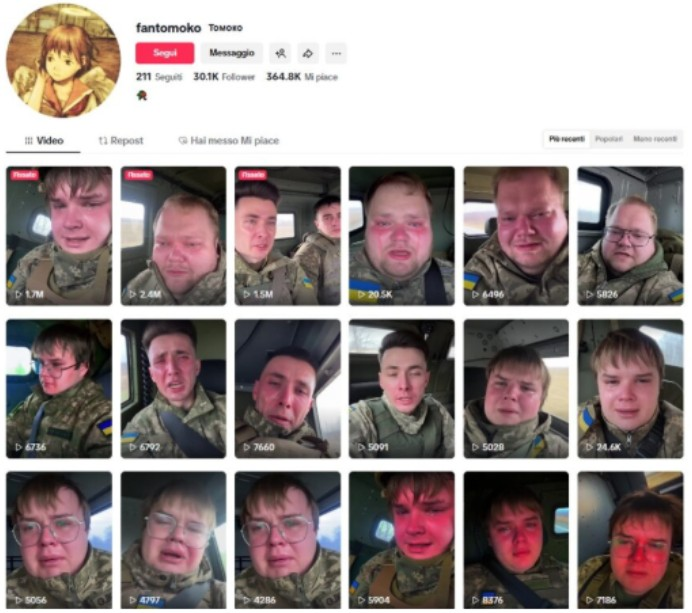

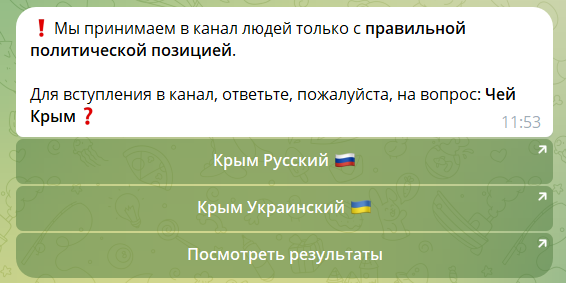

The TikTok account that posted this video is filled with similar content of a pro-Russian and anti-Ukrainian nature. The profile description contains a link to a closed Telegram channel called “MATRYOSHKA”. When attempting to join it, users are asked provocative questions, such as “Whose is Crimea?”, which indicates the channel’s propagandistic nature.

Posts featuring this image gained significant traction: one of them received more than 1,000 reactions and over 200 shares. In the comments, many users treat the photo as real. One commenter wrote: “This person is sick, and only people like this serve in the Ukrainian army, because the healthy and strong are in Poland”. Another added: “This war is strange: they stuff themselves with food, relax at resorts, drive luxury cars, carry money in shopping bags, get positions without rights, and want to be in our government”.

Such fakes are aimed at undermining trust in Ukraine and spreading anti-Ukrainian narratives within Polish society.